Google has announced plans for a new feature intended to help users understand how a given piece of content was created and modified.

The move follows Google joining the Coalition for Content Provenance and Authenticity (C2PA), an alliance of prominent organizations working to curb the spread of misinformation online. Google also helped develop the latest Content Credentials standard alongside companies such as Amazon, Adobe, and Microsoft.

The feature will roll out over the coming months. Google plans to use the existing Content Credentials guidelines (essentially image metadata) to add labels in Search that identify images as AI-generated or AI-edited. This metadata records details such as an image's origin and when and where it was created.

However, several AI developers have declined to adopt the C2PA standard, which lets users trace the origins of media across different formats. Among them is Black Forest Labs, which developed the Flux model used by Grok on X (formerly Twitter).

Users will be able to access the AI flagging system through Google's About This Image feature, which means it will also be available through Google Lens and Android's 'Circle to Search'. Once it is live, users will be able to select "About this image" from a dropdown menu above an image, though the option may not be as easy to find as one would hope.

Is This Sufficient?

Google needed to do something about AI-generated images in its search results, but it is doubtful whether a subtle label is enough. Even if the feature works as described, users will have to take extra steps to check whether an image was generated with AI before Google confirms it, and those who are unfamiliar with About This Image may never realize the tool exists.

The problem of misleading images parallels high-profile incidents involving deepfake video, such as the case in which a finance employee was duped into transferring $25 million by a deepfake impersonating his company's CFO. AI-generated images have caused trouble as well: Donald Trump recently shared digitally manipulated pictures that appeared to show Taylor Swift endorsing his presidential campaign, and Swift has also been the victim of deepfake nudes that went viral.

It is easy to criticize Google for not going far enough, but even Meta is reluctant to be fully transparent on this front. The social media giant recently changed its policy on labeling manipulated media, making the labels less visible by moving them into a post's menu.

The upgrade to Google's 'About This Image' tool is an encouraging step, but more rigorous measures will be needed to inform users and protect them from misleading content. More companies, such as camera manufacturers and developers, will also need to adopt C2PA's watermarks for the system to be as effective as it can be, since Google depends on that embedded data being present and accurate.

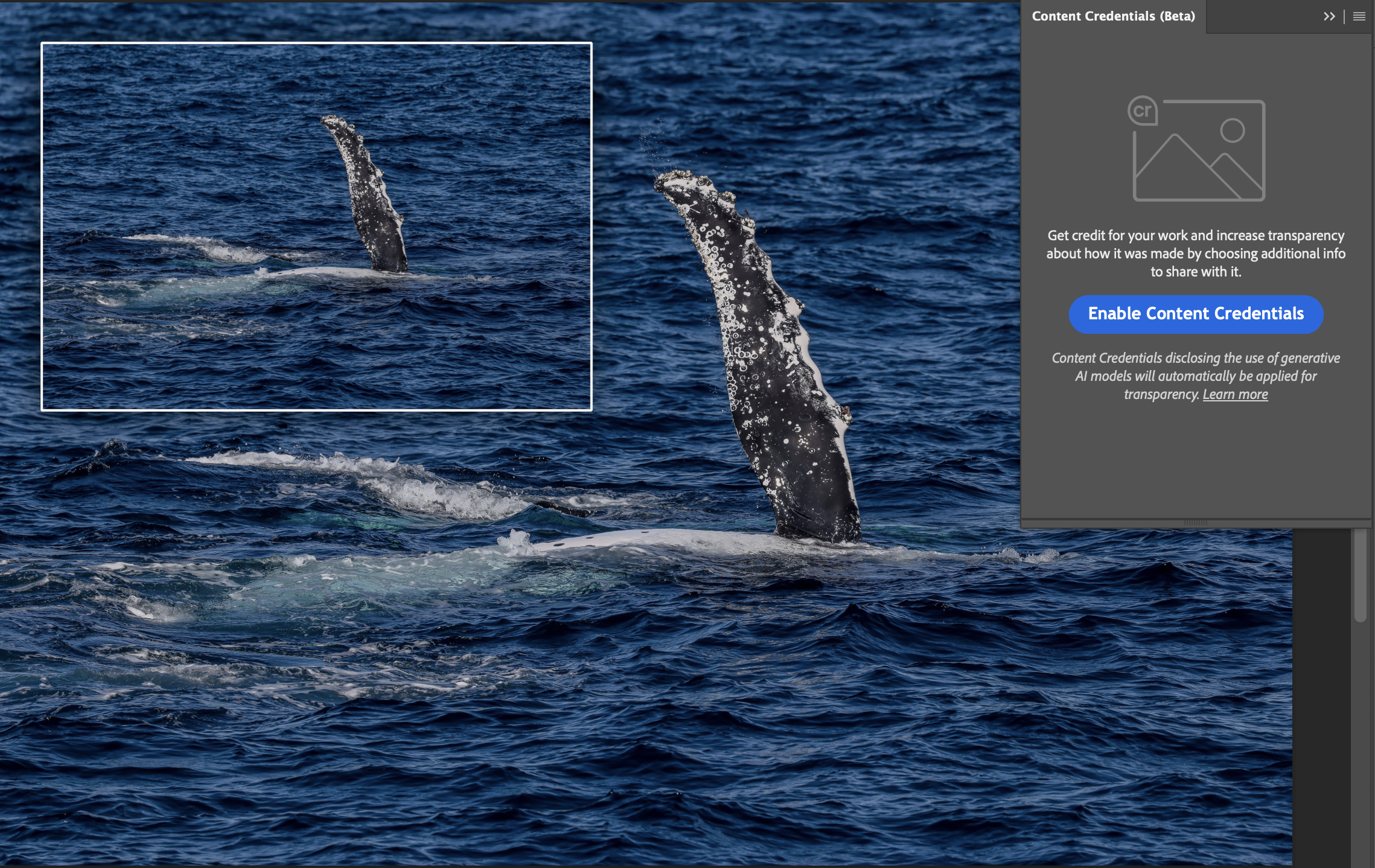

Only a handful of camera models, including the Leica M11-P and the Nikon Z9, currently have built-in Content Credentials support, while Adobe has introduced beta versions of the feature in Photoshop and Lightroom. Even so, it is still up to users to turn these features on and to supply accurate information.

<a href="https://www.techradar.com/computing/artificial-intelligence/ai-generated-and-edited-images-will-soon-be-labeled-in-google-search-results">Source</a>