“`html

Examining X’s Approach to Non-Consensual Nudity: A Study Analysis

X, formerly known as Twitter, says it strictly prohibits non-consensual nudity on its platform. However, a recent study indicates that the platform is more responsive in removing such content, often called revenge porn or non-consensual intimate imagery (NCII), when victims file a Digital Millennium Copyright Act (DMCA) takedown request than when they rely solely on X’s internal NCII reporting system.

The Research Overview

The pre-print study, reported by 404 Media and not yet peer reviewed, was conducted by researchers at the University of Michigan. They set out to evaluate how effective X’s policies on non-consensual nudity are and to illustrate the difficulties victims face when trying to get NCII removed from the internet.

Methodology of the Experiment

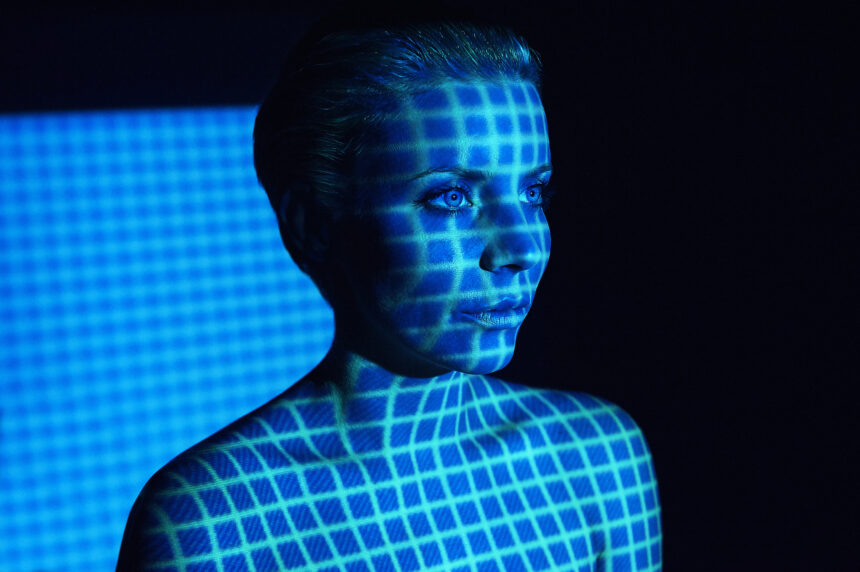

The researchers created two sets of accounts on X for their experiment. They posted AI-generated images depicting “white women appearing to be in their mid-20s to mid-30s,” nude from the waist up with their faces visible. The researchers chose white women intentionally “to reduce potential biases in treatment outcomes,” and suggested that future studies include other genders and ethnicities.

Of the 50 AI-generated nude images posted on X, half were reported under X’s policy against non-consensual nudity and the other half were flagged through DMCA takedown requests.

Conclusion and Implications

The research points to significant gaps in how social media platforms handle sensitive content such as NCII. It raises critical questions about how effectively these platforms protect users and how they respond when violations occur.


“`