
In March 2024, we highlighted the work of British AI startup Literal Labs, which aims to make GPU-based training obsolete with its Tsetlin Machine, a machine learning model that uses logic-driven learning to classify data.

The Tsetlin Machine uses Tsetlin automata to learn logical rules that connect features of the input data to their classifications. It adjusts these rules according to whether its decisions are correct, using a reward-and-penalty mechanism.
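The reward-and-penalty mechanism can be illustrated with a minimal Python sketch of a classic two-action Tsetlin automaton and a single clause built from such automata. This is not Literal Labs' implementation, which the article does not describe. The class and function names (`TsetlinAutomaton`, `train_toward`, `evaluate_clause`), the number of states and the deterministic toy environment are all illustrative assumptions. A full Tsetlin Machine also adds clause voting and more elaborate feedback rules, which are omitted here.

```python
from typing import List, Optional, Sequence


class TsetlinAutomaton:
    """Two-action Tsetlin automaton with 2 * n states (a textbook-style sketch).

    States 1..n choose action 0 ("exclude"); states n+1..2n choose action 1 ("include").
    """

    def __init__(self, n: int, state: Optional[int] = None) -> None:
        self.n = n
        # Default: start on the "exclude" side, right next to the decision boundary.
        self.state = n if state is None else state

    def action(self) -> int:
        return 1 if self.state > self.n else 0

    def reward(self) -> None:
        # Reinforce the current action by moving away from the boundary (capped at the ends).
        if self.action() == 1:
            self.state = min(self.state + 1, 2 * self.n)
        else:
            self.state = max(self.state - 1, 1)

    def penalize(self) -> None:
        # Weaken the current action by moving toward, and possibly across, the boundary.
        if self.action() == 1:
            self.state -= 1
        else:
            self.state += 1


def train_toward(ta: TsetlinAutomaton, target_action: int, steps: int) -> None:
    # Hypothetical toy environment: reward the desired action, penalize the other one.
    for _ in range(steps):
        if ta.action() == target_action:
            ta.reward()
        else:
            ta.penalize()


def evaluate_clause(automata: Sequence[TsetlinAutomaton], x: Sequence[int]) -> int:
    # Literals are the binary features followed by their negations: x0..x(k-1), NOT x0..NOT x(k-1).
    literals: List[int] = list(x) + [1 - v for v in x]
    # The clause is the AND of every literal whose automaton chose "include".
    # Note: in this sketch, a clause with nothing included returns 1.
    return int(all(lit for ta, lit in zip(automata, literals) if ta.action() == 1))


if __name__ == "__main__":
    n = 3
    ta = TsetlinAutomaton(n)  # starts in state 3, i.e. "exclude"
    train_toward(ta, target_action=1, steps=5)
    print(ta.state, ta.action())  # → 6 1

    # Clause over two binary features: include x0 and NOT x1, exclude the other literals.
    clause = [
        TsetlinAutomaton(n, 2 * n),  # x0      -> include
        TsetlinAutomaton(n, 1),      # x1      -> exclude
        TsetlinAutomaton(n, 1),      # NOT x0  -> exclude
        TsetlinAutomaton(n, 2 * n),  # NOT x1  -> include
    ]
    print(evaluate_clause(clause, [1, 0]))  # → 1
    print(evaluate_clause(clause, [1, 1]))  # → 0
```

In this sketch, learning amounts to incrementing or decrementing small integer counters, and inference is a logical AND over bits, with no floating-point arithmetic involved.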

This approach builds on the learning automata conceived by Soviet mathematician Mikhail Tsetlin in the 1960s. Unlike traditional neural networks, which mimic biological neurons, it relies on learning automata for tasks such as classification and pattern recognition.

Design Focused on Energy Efficiency

Recently, with support from Arm, Literal Labs introduced a compact Tsetlin Machine model for anomaly detection in edge AI and IoT applications. The model measures just 7.29 KB, yet it achieves high accuracy and substantially improves anomaly detection.

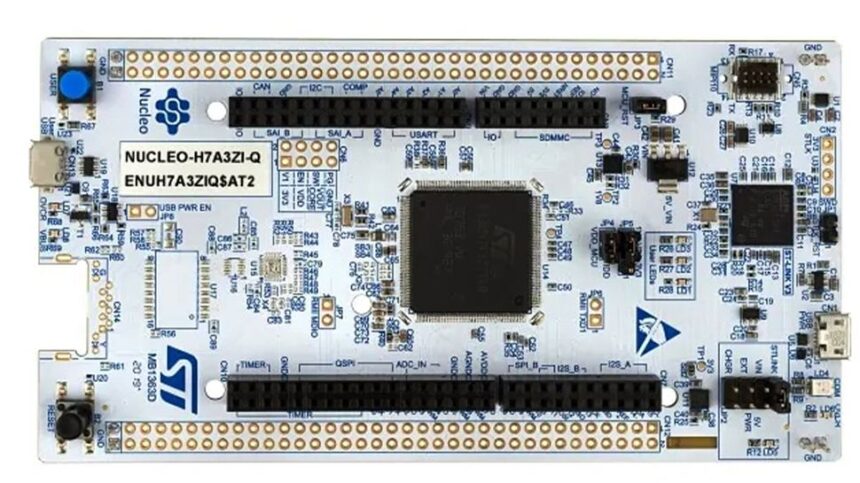

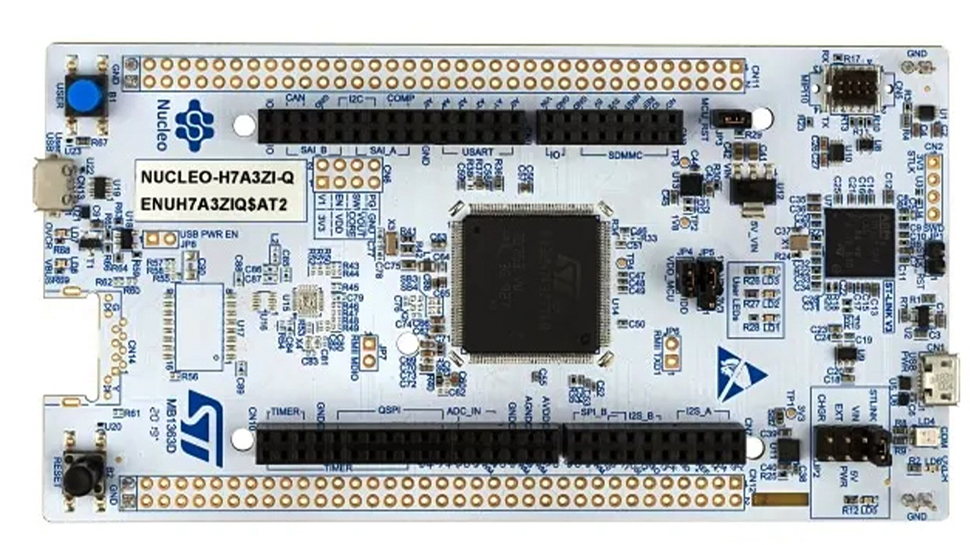

The model was evaluated with the MLPerf Inference: Tiny benchmark suite on an inexpensive $30 NUCLEO-H7A3ZI-Q development board, which has a 280 MHz Arm Cortex-M7 processor and no AI accelerator. According to the results, Literal Labs’ model runs inference 54 times faster than conventional neural networks while using 52 times less energy.

Compared with leading industry models, Literal Labs’ design offers significantly lower latency along with an energy-efficient architecture, making it well suited to low-power devices such as sensors. This positions it for industrial IoT, predictive maintenance, and health diagnostics, where fast and accurate anomaly detection is essential.

Such a compact, energy-efficient model could broaden AI adoption across multiple industries by lowering costs and making advanced technology more accessible.

Literal Labs states: “Compact models are especially beneficial in these scenarios since they demand less memory and processing power. This allows them to operate on more cost-effective hardware with lower specifications. Consequently, this not only minimizes expenses but also expands the variety of devices capable of supporting sophisticated AI functionalities—making large-scale deployment feasible even in resource-limited environments.”

Additional Insights from TechRadar Pro

- The top-rated AI tools available today

- This startup aims to eliminate GPU training with revolutionary technology

- A recent report indicates storage challenges surpass those posed by GPUs in AI development

